Hi there!

Little did I know that learning to work with data would change my life. Within a few months of diving into this world, I discovered an incredible power at my fingertips: the power of data, the ability to quantify and understand virtually anything I set my mind to.


Understanding the quantification of the everyday

From the number of trash bags I use per week to the fluctuating price of milk, my ability to work with technology, and data in particular, lets me quantify these mundane things and understand what my routine actually looks like.

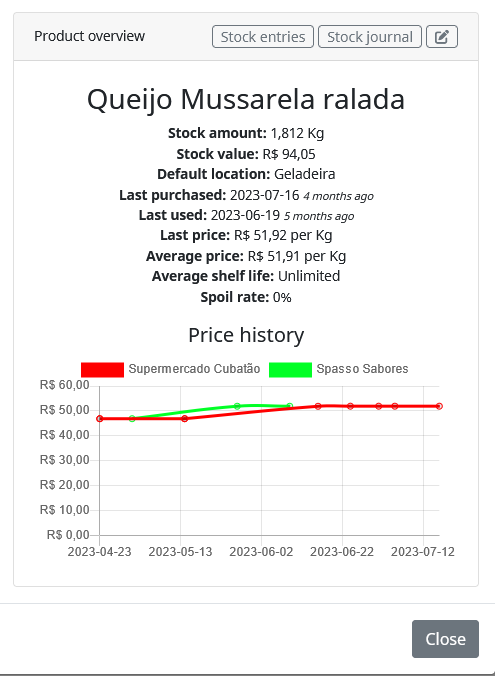

In recent months, I’ve been dedicated to gathering data about the everyday things in my life. All it takes is a simple mini PC, similar to an Intel NUC (I use a Beelink Mini S N5095), and an open-source application called Grocy.

With Grocy, I can input information about my purchase prices for each product and even track the consumption of products, providing insights into the household demand for those items.
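To give an idea of the kind of insight this produces, here is a minimal Python sketch. It does not use Grocy's API or export format: the `purchases` rows and the `summarize` helper are made up for illustration, and "units bought per week" is only a rough proxy for real consumption.

```python
from collections import defaultdict
from datetime import date

# Hypothetical purchase log: (product, purchase date, quantity, unit price).
# These rows are invented for illustration and are not Grocy's data format.
purchases = [
    ("milk", date(2023, 5, 1), 2, 0.89),
    ("milk", date(2023, 5, 15), 2, 0.95),
    ("trash bags", date(2023, 5, 1), 10, 0.10),
    ("trash bags", date(2023, 5, 29), 10, 0.10),
]


def summarize(rows):
    """Return the average unit price and units bought per week, per product."""
    grouped = defaultdict(list)
    for product, day, quantity, price in rows:
        grouped[product].append((day, quantity, price))

    summary = {}
    for product, items in grouped.items():
        total_units = sum(q for _, q, _ in items)
        total_spent = sum(q * p for _, q, p in items)
        days = [d for d, _, _ in items]
        # Weeks between the first and last purchase, counted as at least one.
        weeks = max((max(days) - min(days)).days / 7, 1)
        summary[product] = {
            "avg_unit_price": round(total_spent / total_units, 2),
            "units_per_week": round(total_units / weeks, 2),
        }
    return summary


for product, stats in summarize(purchases).items():
    print(product, stats)
```

Even a rough number like this is enough to notice when a price creeps up or when a product runs out faster than expected.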

However, one downside to using Grocy is that the data entry has to be done manually. It can be tempting to skip entries on busy days, but that’s where my knowledge of automation comes into play.

Automating things

If you know even the basics of working with data, you’re likely familiar with Python. It’s what I use most for automating tasks.

From looking up grocery data by EAN (the barcode number) to populating my personal data warehouse with calendar data, Python can automate all sorts of tasks. Automation is not just possible but sometimes necessary: the more personal services you host and the more data you store, the more time it all takes to maintain.
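As a small example of the EAN side of this, a script can check a barcode before looking anything up. The sketch below validates the check digit of an EAN-13 code; the function name `is_valid_ean13` is my own, and the lookup step itself is left out because it depends on whichever product database you choose.

```python
def is_valid_ean13(code: str) -> bool:
    """Return True if `code` is 13 ASCII digits with a correct check digit."""
    if len(code) != 13 or not (code.isascii() and code.isdigit()):
        return False
    digits = [int(c) for c in code]
    # The first 12 digits are weighted 1, 3, 1, 3, ... from left to right.
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    # The check digit brings the weighted sum up to a multiple of 10.
    check = (10 - total % 10) % 10
    return check == digits[12]


print(is_valid_ean13("4006381333931"))
print(is_valid_ean13("4006381333932"))
```

Rejecting a mistyped or badly scanned code early avoids storing a product under the wrong barcode.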

However, it’s important to note that a robust infrastructure isn’t a prerequisite. The belief that it is often discourages people from running their own personal services. The process doesn’t have to be complex: you don’t need to set up a dedicated server or orchestrate jobs with tools like Apache Airflow. Always remember that the simpler the solution, the more likely you (not just as a developer, but also as a user) are to stick with the process.

Of course, it’s better to automate as much as you can, including the execution itself. But not having a dedicated server doesn’t mean you can’t automate. Starting a script by hand is the least time-consuming part of the process; what matters is the time saved by the work the script does for you (for personal purposes, at least).

Data Scraping

There are several ways, and several motivations, to scrape data. I’ve scraped data from PDFs for academic research, and from real estate agency websites to find the best option available for a given region and budget. The way I see it, when you need that data for yourself there are two paths: the simplified way and the hard way.

1. Simplified data scraping

The simplified way applies when you just want to check a hunch or a single key indicator. If the data is on the web, I suggest using a browser extension. Here is my suggestion:

- Web Scraper (available for both Chrome and Firefox)

With this web scraper, you can interact with the page, create loops, handle pagination, and pull information from inside a link. Everything is done by interacting with the browser. Once it’s set up, you just run it and export the data to an Excel file.

This was very useful when I was searching for an apartment. Every real estate agency had its own website with a different layout, and it was exhausting to dig out the information I needed to compare the options: the number of bedrooms and bathrooms, the floor area in square meters, the location, the price. After the scrape, I had an Excel file full of information, so I could make the best possible decision. And best of all, it was all based on data.
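Once the data is exported, comparing the options takes only a few lines. The sketch below assumes the export was saved as CSV rather than Excel; the column names (`agency`, `area_m2`, `price`) and the sample rows are invented for illustration, so adjust them to whatever your scrape produces.

```python
import csv
import io

# Hypothetical export; the column names and rows are invented for illustration.
SAMPLE_EXPORT = """agency,bedrooms,bathrooms,area_m2,price
Agency A,2,1,60,900
Agency B,3,2,85,1190
Agency C,2,1,55,880
"""


def rank_by_price_per_m2(csv_file):
    """Read listings and return them sorted by price per square meter."""
    rows = []
    for row in csv.DictReader(csv_file):
        area = float(row["area_m2"])
        price = float(row["price"])
        rows.append({**row, "price_per_m2": round(price / area, 2)})
    return sorted(rows, key=lambda r: r["price_per_m2"])


# io.StringIO stands in for a real file; use open("export.csv", newline="") instead.
ranked = rank_by_price_per_m2(io.StringIO(SAMPLE_EXPORT))
for listing in ranked:
    print(listing["agency"], listing["price_per_m2"])
```

Price per square meter is only one possible yardstick; the same pattern works for any column you scraped.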

2. The hard way of data scraping

The hard way is, of course, using a programming language; in my case, Python. As a data analyst, you could also use other languages such as R, which is very powerful too.

The trade-off is that it’s harder to set up everything you want, but once it works you can schedule it and run it independently of a browser. This means you can scrape anything, not only content that is online. This was very useful when I was writing my graduation thesis, where most of the information was not online but in PDFs. Some of the PDFs weren’t even digital text, just scans, meaning I needed to use Python to extract the text with machine learning. This would not have been possible with the simple solution, because the data was not easily accessible.

In short, this way gives you control over everything.
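For web content, the core of the hard way can be surprisingly small. Here is a minimal sketch using only Python's standard library; the `PriceParser` class, the `price` CSS class, and the sample markup are assumptions for illustration, and a real site will need its own selectors plus a step to download the page.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded listing page.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Flat 1</span> <span class="price">900</span></li>
  <li><span class="name">Flat 2</span> <span class="price">1190</span></li>
</ul>
"""


class PriceParser(HTMLParser):
    """Collect the text of every element whose class attribute is 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the tag.
        if ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        # Any closing tag ends the capture; enough for flat markup like the sample.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def extract_prices(html):
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.prices


print(extract_prices(SAMPLE_HTML))
```

Because this is an ordinary script, it can be scheduled or pointed at local files, which is exactly what a browser extension cannot do.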

Conclusion

Through my experiences, I’ve realized that the true essence of working with data lies not only in the technical side, but also in how it shapes the way we perceive and interact with the world around us. We all have our own daily routines and unique family setups, and we live in different places. That’s what makes working with data on a personal level so fascinating: it’s like discovering a whole new world just for you. Plus, it’s an opportunity for those who have always been on the tech side to step into the shoes of a client, a business analyst, or a scrum master and see things from a completely different angle.
